Google Bots: What Are They and How Do They Work?

Search engines are an essential part of internet activity. They help consumers locate information fast on the internet by searching through massive volumes of data and providing relevant results. It is possible to traverse the internet and get the information we require with search engines.

Search engine crawlers, often known as bots, are essential to the operation of search engines. These bots are automated programs that crawl websites continuously, gathering information that is then used to compile an index of the internet. When a user submits a search query, the search engine uses this index to quickly locate and return relevant web pages.

Search engine crawlers act as internet librarians, cataloguing content and making it easily available to users. These bots are necessary for search engines to function properly; without them, locating information online would be considerably more difficult. To fully grasp the relevance of search engines in our everyday lives, it is necessary to understand the role of search engine crawlers and how they work.

Read on as we will discuss what Google Bots are.

What are Google Bots?

Google Bot is the search engine crawler that Google uses to index web pages and gather information for search results. It is a program that crawls the internet continuously, identifying and indexing new web pages.

When Google Bot comes across a new web page, it scans the text and follows any links to find more pages. It then saves information about the web page, such as its content, URL, and metadata like the title and description. This data is used to create a web index, which Google’s search engine then uses to provide search results.

There are various types of Google Bots, each with its own function.

The main crawler has two versions:

Google Bot Desktop – the crawler that simulates a user on a desktop

Google Bot Smartphone – the crawler that simulates a user on a mobile device

Google’s crawlers are further divided into subtypes, mainly:

Googlebot

This is the primary Google crawler responsible for indexing web pages for the search engine’s index.

Googlebot-Mobile

This bot is intended to crawl and index mobile-friendly pages, assisting Google in providing better search results for mobile visitors.

Googlebot-Image

This bot indexes images online, allowing users to search for and locate images using Google’s image search tool.

Googlebot-News

This bot searches news websites and indexes the most recent news stories, ensuring that Google’s search results remain current.

Why Does Google Use Bots?

Google bots crawl and index web pages for a variety of purposes. One of the key reasons is to increase the accuracy and relevance of Google’s search results. By continually crawling and indexing new web pages, Google can keep its search results up to date and present visitors with the most relevant and valuable information.

Google bots are also important in search engine optimisation (SEO). When Google indexes a website, it becomes more visible in search results, potentially driving additional visitors to the site. Website owners may boost their chances of ranking higher in search results and reaching a broader audience by optimising their website for search engines and ensuring that it is readily discoverable by Google’s bots.

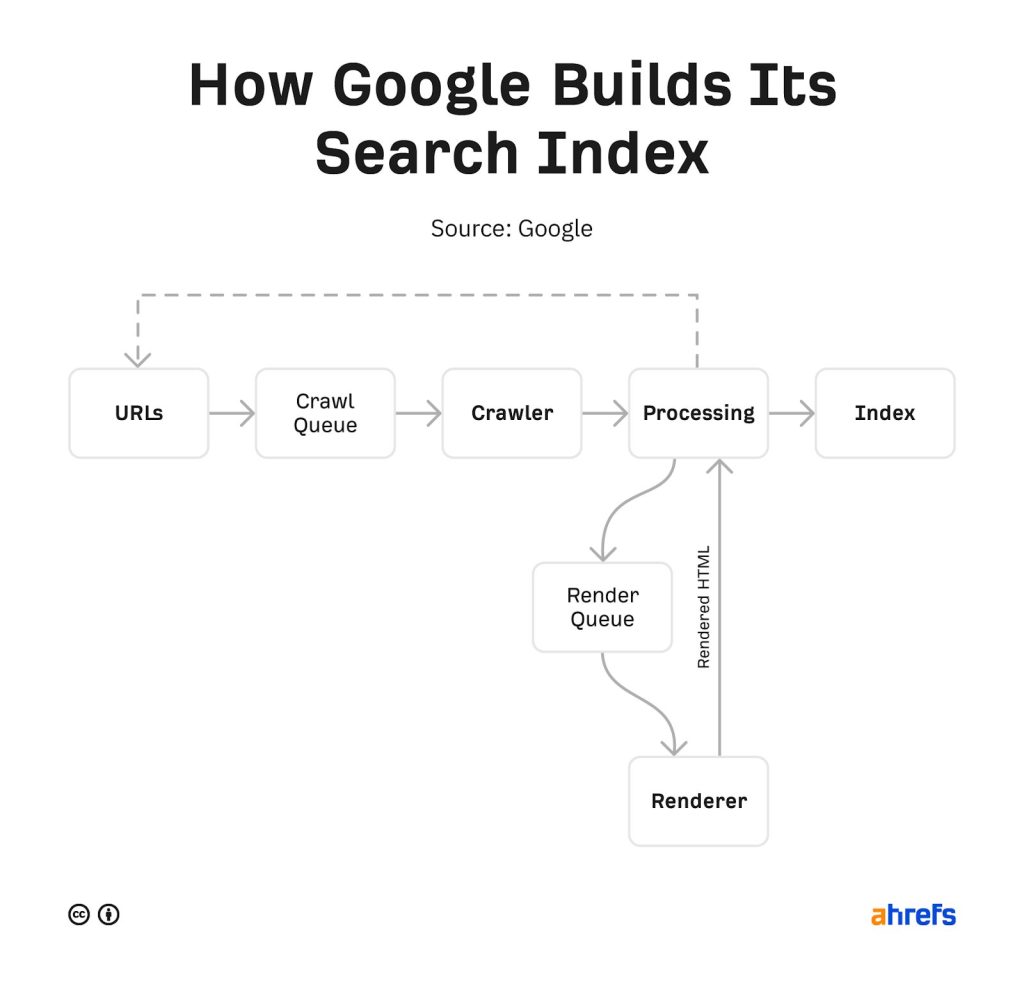

Source: AHREFS

How Do Google Bots Work?

Google Bot crawls and indexes web pages on the internet. Here’s a summary of how it works:

- Google Bot begins by crawling a known URL or a sitemap. The links it finds there are used to locate additional URLs to crawl and index.

- When Google Bot crawls a page, it analyses its content and structure to assess its relevance and importance.

- This analysis considers various elements, such as keywords, meta tags, internal and external links, and other indications of content quality.

- Google Bot also checks for technical faults that may hinder the page’s crawlability or indexability, such as broken links or duplicate content.

- After analysing the page, Google Bot adds it to its index, a vast database of all the pages it has crawled and indexed.

- When a user searches in Google, the index is used to find the most relevant pages for the query, considering a variety of criteria such as the user’s location, search history, and other personalisation factors.

- The algorithm then ranks the sites in order of relevance and significance. The results are shown to the user as a search engine results page (SERP).
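
The steps above can be illustrated with a toy sketch. It is not how Google Bot is actually implemented: the "web" here is a hypothetical in-memory dictionary (`TOY_WEB`), the index is a plain word-to-URL mapping, and there is no ranking. It only shows the crawl, follow links, index, and look up cycle.

```python
from collections import deque
from html.parser import HTMLParser


class LinkAndTextParser(HTMLParser):
    """Collects href values from <a> tags and the words of a page's text."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        self.words.extend(data.lower().split())


# Hypothetical stand-in for the web: URL -> HTML.
# A real crawler would fetch these pages over HTTP.
TOY_WEB = {
    "https://example.com/": '<a href="https://example.com/about">About</a> welcome home',
    "https://example.com/about": '<a href="https://example.com/">Home</a> about crawlers',
}


def crawl(seed_urls, pages):
    """Breadth-first crawl from the seeds; returns an index of word -> set of URLs."""
    index = {}
    seen = set(seed_urls)
    queue = deque(seed_urls)
    while queue:
        url = queue.popleft()
        html = pages.get(url)
        if html is None:  # broken link: nothing to index
            continue
        parser = LinkAndTextParser()
        parser.feed(html)
        for word in parser.words:
            index.setdefault(word, set()).add(url)
        for link in parser.links:  # newly discovered URLs join the queue
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query (no ranking)."""
    results = [index.get(word, set()) for word in query.lower().split()]
    return sorted(set.intersection(*results)) if results else []


index = crawl(["https://example.com/"], TOY_WEB)
print(search(index, "crawlers"))
```

Starting from a single seed URL, the crawler discovers the second page only through the link on the first, which is the same discovery mechanism the list describes.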

Pros and Cons of Google Bots

Google bots can have advantages and disadvantages, depending on how they are employed and optimised. Here are some of the potential benefits and drawbacks of Google bots:

Pros:

- Google bots can assist website owners with increasing their online exposure and search engine rankings, resulting in more site visitors.

- Google bots assist in guaranteeing that search results are accurate, up-to-date, and relevant to users’ queries by scanning and indexing web pages.

- Google bots can detect and filter out low-quality information, spam, and other web-based abuse, improving overall web quality.

- Google bots can assist website owners in optimising their sites for better results by offering insights and data about their site’s performance and user behaviour.

- By supplying enormous amounts of training data, Google bots can aid in developing machine learning and artificial intelligence technologies.

Cons:

- Google bots may struggle to crawl and index complicated or badly designed websites, resulting in incomplete indexing and lower visibility in search results.

- Websites that block crawlers through robots.txt files or other restrictions may not be indexed by Google bots, resulting in lower visibility in search results.

- In rare situations, Google bots may mistake high-quality content for spam or low-quality content, resulting in penalties or lower search engine rankings.

- If Google bots are not properly calibrated and controlled, they could contribute to the spread of fake news, disinformation, and other harmful content.

- Some users may be concerned about their privacy and data acquisition by Google bots, which might influence their trust and confidence.

How Do You Know if Google Bots Visited Your Site?

Check your server logs to find out whether Google bots have visited your website. The logs show the IP addresses of your website’s visitors, including any Google bots that have crawled your site.

To do this, look in the server logs for user agents that match those used by Google bots. The user agent string of the main crawler contains the token “Googlebot”.
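
As a rough sketch of that log check, the snippet below filters log lines by user agent. It assumes the common "combined" access log format, where the user agent is the last quoted field; the function name `googlebot_hits` and the sample lines are made up for illustration, so adjust the pattern to your own server's format.

```python
import re

# Assumed log format: "combined" style, with the user agent as the last quoted field.
LOG_LINE = re.compile(r'^(?P<ip>\S+) .*"(?P<agent>[^"]*)"$')


def googlebot_hits(log_lines):
    """Return (ip, user_agent) pairs for lines whose user agent mentions Googlebot."""
    hits = []
    for line in log_lines:
        match = LOG_LINE.match(line.strip())
        if match and "googlebot" in match.group("agent").lower():
            hits.append((match.group("ip"), match.group("agent")))
    return hits


# Made-up sample lines, for illustration only.
sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET /about HTTP/1.1" 200 734 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for ip, agent in googlebot_hits(sample):
    print(ip, agent)
```

Keep in mind that any client can send a user agent containing "Googlebot", so a match is an indication of a visit rather than proof.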

Google Search Console can also show whether Google has crawled your website. It gives information on your website’s performance in search results, such as the last time Google crawled it and any crawl errors it detected.

How To Control Google Bots

You have some influence over how Google Bots interact with your website as a website owner. Here are some methods to manage Google Bots:

Make use of a robots.txt file.

A robots.txt file is a text file that tells Google Bots which pages or areas of your site they may crawl and which they should avoid. You may use it to keep Google away from pages that are irrelevant to search results, such as login or admin pages. Note that robots.txt only asks crawlers to stay away; it does not restrict access, so sensitive pages still need proper authentication.
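
To see how such rules are read, here is a small sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the paths in it are hypothetical examples, not recommendations for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are examples only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /login

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given crawler may fetch each URL under these rules.
for path in ("/", "/admin/settings", "/login", "/private/notes"):
    url = "https://example.com" + path
    print(path, parser.can_fetch("Googlebot", url))
```

In this sketch, `/private/notes` is still allowed for Googlebot, because a crawler follows the group that names it and ignores the `*` group. If a rule should apply to every crawler, it has to be repeated in each named group.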

Use the meta tag "noindex."

The “noindex” meta tag instructs Google not to index a certain page. It can be used to keep duplicate pages, thin content, or other low-quality content out of search results.
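
The tag itself is a single line in the page's head, for example `<meta name="robots" content="noindex">`. As a minimal sketch, the following checks whether a page carries it; the class and function names are hypothetical, and the sample page is made up.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(part.strip().lower() for part in content.split(","))


def is_noindex(html):
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Made-up sample page.
page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Thin page</body></html>'
print(is_noindex(page))
```

A check like this can be run over a list of your own pages to confirm that only the intended ones are excluded from indexing.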

Make use of the "nofollow" attribute.

The “nofollow” attribute, set as rel="nofollow" on a link, asks Google not to follow that link. It can be used on links to pages that are irrelevant to search results, or on spammy or low-quality links.
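
For illustration, the sketch below separates the links on a page into those marked `rel="nofollow"` and the rest. The class name and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class NofollowLinkParser(HTMLParser):
    """Splits the links of a page into followed and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "nofollow noopener".
        rel_values = (attrs.get("rel") or "").lower().split()
        target = self.nofollow if "nofollow" in rel_values else self.followed
        target.append(href)


# Made-up sample markup.
html = (
    '<a href="/pricing">Pricing</a> '
    '<a href="https://example.org/ad" rel="nofollow noopener">Sponsored</a>'
)
link_parser = NofollowLinkParser()
link_parser.feed(html)
print("followed:", link_parser.followed)
print("nofollow:", link_parser.nofollow)
```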

Make use of Google Search Console.

Google Search Console is a free tool that lets you see how Google Bots crawl and index your site. It can help you uncover crawlability, indexability, and other technical issues affecting your site’s visibility in search results.

Frequently Asked Questions

Numerous factors determine how often Googlebot crawls a page. The PageRank value of the page and the number and quality of its backlinks are critical. The load speed, structure, and update frequency of a website’s content also influence how often Googlebot visits the site. Google may crawl a page with numerous backlinks as often as every 10 seconds, while a site with few links may only be crawled every few weeks.

Googlebot uses an algorithm to choose which sites to crawl, how frequently to crawl them, and how many pages to fetch from each site. Google’s crawlers are configured to avoid crawling a site too quickly, so that they do not overwhelm it.

There are two ways to verify a crawler’s IP address. First, some search engines publish IP lists or ranges, and you can validate the crawler by comparing its IP address against the published list. Second, you can use a DNS lookup to connect the IP address to a domain name and check that the domain belongs to the search engine.
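
Both checks can be sketched in a few lines. This is an illustration under stated assumptions: the domain suffixes in `GOOGLE_DOMAINS` are the ones commonly documented for Googlebot's reverse DNS names, the IP range is a documentation-only placeholder rather than a real Google range, and the resolvers are passed in as parameters so the demonstration runs offline with stub data.

```python
import ipaddress
import socket

# Assumption: domain suffixes expected in Googlebot reverse DNS names.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com")


def ip_in_ranges(ip, cidr_ranges):
    """Method 1: compare the IP against a published list of CIDR ranges."""
    address = ipaddress.ip_address(ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in cidr_ranges)


def verify_by_dns(ip, reverse_lookup=None, forward_lookup=None):
    """Method 2: reverse DNS, check the domain, then confirm with a forward lookup."""
    # Default resolvers use the system DNS (gethostbyname_ex handles IPv4 only).
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.endswith(GOOGLE_DOMAINS):
            return False
        # The forward lookup must lead back to the original IP address.
        return ip in forward_lookup(hostname)
    except OSError:  # lookup failed
        return False


# Offline demonstration with stub resolvers and made-up data.
fake_reverse = {"192.0.2.10": "crawl-192-0-2-10.googlebot.com"}
fake_forward = {"crawl-192-0-2-10.googlebot.com": ["192.0.2.10"]}

print(ip_in_ranges("192.0.2.10", ["192.0.2.0/24"]))
print(verify_by_dns("192.0.2.10", fake_reverse.__getitem__, fake_forward.__getitem__))
```

The forward lookup matters because anyone who controls the reverse DNS for their own IP address can make it return a Google-looking hostname; only the real domain owner can make that hostname resolve back to the same IP.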

Googlebot crawls from IP addresses both inside and outside the United States. When Googlebot appears to come from a specific country, treat it like any other user from that country.

Conclusion

Google Bot is an important technology that aids in website optimisation and online activity. Google Bot contributes to search results’ accuracy, relevance, and timeliness by crawling and indexing webpages. As a result, it is a must-have tool for website owners who rely on their site to generate traffic, leads, and sales.

Prioritising website optimisation for Google Bot is critical for website owners who want to improve their chances of getting indexed and appearing in relevant search results. This may be accomplished by optimising the site’s structure and design, producing high-quality, relevant content, and correcting any crawling and indexing issues that arise.

Google Bot is a necessary tool that website owners must pay attention to. Website owners may enhance their search engine ranks, boost traffic, and ultimately achieve their online goals by prioritising website optimisation for Google Bot.